This article shows how to use an Alibaba Cloud AMD CPU cloud server (g8a) and Dragon Lizard (OpenAnolis) container images to build a personal visual AI assistant based on Tongyi Qianwen Qwen-VL-Chat.

Qwen-VL is a large vision language model developed by Alibaba Cloud. It accepts images, text, and detection boxes as input and produces text and detection boxes as output. Qwen-VL-Chat is a visual AI assistant built on top of Qwen-VL using alignment techniques. It supports more flexible interaction, including multi-image input, multi-round Q&A, and creative writing. It natively supports multilingual dialogue in English, Chinese, and other languages, as well as multi-image comparison, questions about a specified image, and multi-image literary creation.

Important:

The Qwen-VL-Chat model is open-sourced under its LICENSE, and free commercial use requires submitting a commercial authorization application. You are responsible for complying with the user agreement, usage norms, and relevant laws and regulations of this third-party model, and for the legality and compliance of your use of it.

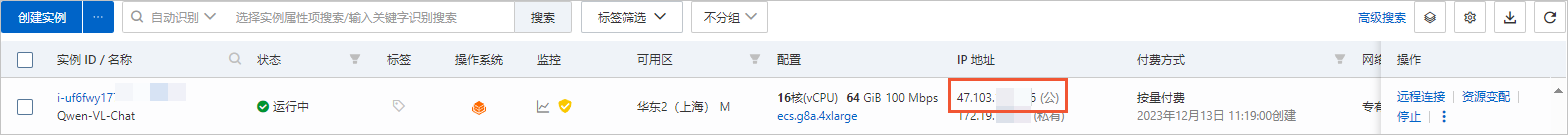

Create an ECS instance

- Go to the instance creation page.

- Follow the on-screen prompts to configure the parameters and create an ECS instance. The parameters that need attention are listed below; for the others, see the custom purchase instance documentation.

- Instance: Inference with the Qwen-VL-Chat model requires substantial computing resources and a large amount of memory at runtime. To keep the model running stably, select at least the ecs.g8a.4xlarge instance type (64 GiB memory).

- Image: Alibaba Cloud Linux 3.2104 LTS 64-bit.

- Public IP address: Select Assign Public IPv4 Address, choose pay-by-traffic as the bandwidth billing method, and set the peak bandwidth to 100 Mbps to speed up the model download.

- Security group rules: Add inbound rules to the security group of the ECS instance to open ports 22, 443, and 7860 (port 7860 is used to access the WebUI service). For details, see Adding Security Group Rules.

- After the creation is completed, get the public network IP address on the ECS instance page.
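Once you are logged in to the instance (see the next section), you can sanity-check the 64 GiB requirement above. The helper below is hypothetical and not part of the original procedure: it reads MemTotal from /proc/meminfo and, because the kernel reports somewhat less than the nominal instance memory, it accepts 90% of the target. That margin is an assumption chosen for this sketch.

```shell
# Hypothetical preflight helper: is there roughly enough RAM for Qwen-VL-Chat?
# Usage: has_enough_memory <min_gib> [meminfo_file]
has_enough_memory() {
  local min_gib="$1"
  local meminfo="${2:-/proc/meminfo}"   # the file argument exists only to ease testing
  local total_kib required_kib
  # MemTotal is reported in KiB, e.g. "MemTotal:       65000000 kB".
  total_kib=$(awk '/^MemTotal:/ {print $2}' "$meminfo" 2>/dev/null)
  [ -n "$total_kib" ] || return 1
  # Assumed margin: accept 90% of the nominal size, because MemTotal excludes
  # memory reserved by firmware and the kernel.
  required_kib=$((min_gib * 1024 * 1024 * 9 / 10))
  [ "$total_kib" -ge "$required_kib" ]
}
```

For example, `has_enough_memory 64 && echo "memory OK"` prints the message only when MemTotal is at least about 57.6 GiB.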

Create a Docker running environment

- Connect to the ECS instance remotely. For details, see Logging in to a Linux Instance through Password or Key Authentication.

- Install Docker. For details, see Installing Docker on an Alibaba Cloud Linux 3 Instance.

- Create and run the PyTorch AI container. The Dragon Lizard (OpenAnolis) community provides a wide range of container images based on Anolis OS, including PyTorch images optimized for AMD. You can use one of these images directly to create a PyTorch runtime environment.

sudo docker pull registry.openanolis.cn/openanolis/pytorch-amd:1.13.1-23-zendnn4.1

sudo docker run -d -it --name pytorch-amd --net host -v $HOME:/root registry.openanolis.cn/openanolis/pytorch-amd:1.13.1-23-zendnn4.1

Deploy Qwen-VL-Chat
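The docker run command above creates the container only once. After the instance restarts, the pytorch-amd container is stopped, and the docker exec command used in Step-1 fails until the container is started again. The following is a minimal sketch, assuming the container name and image used above. It reports whether the container is running, starts it if it is stopped, or creates it with the same options if it does not exist. The function name and the DOCKER override variable (defaulting to sudo docker) are hypothetical conveniences, not part of the original procedure.

```shell
# Hypothetical helper: make sure the pytorch-amd container from the commands above is running.
# DOCKER may override the docker command (default: "sudo docker").
IMAGE=registry.openanolis.cn/openanolis/pytorch-amd:1.13.1-23-zendnn4.1
NAME=pytorch-amd

ensure_container() {
  local docker="${DOCKER:-sudo docker}"
  local state
  # docker inspect prints true/false for an existing container and fails otherwise.
  if state=$($docker inspect -f '{{.State.Running}}' "$NAME" 2>/dev/null); then
    if [ "$state" = "true" ]; then
      echo "running"
    else
      $docker start "$NAME" >/dev/null && echo "started"
    fi
  else
    # Same options as the docker run command above.
    $docker run -d -it --name "$NAME" --net host -v "$HOME:/root" "$IMAGE" >/dev/null &&
      echo "created"
  fi
}
```

Run `ensure_container` on the host before entering the container; it prints running, started, or created depending on what it had to do.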


Step-1: Install the software required to configure the model

- Enter the container environment.

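sudo docker exec -it -w /root pytorch-amd /bin/bash

Important: All subsequent commands must be run inside the container. If you exit it unexpectedly, re-enter it with the command above. To check whether the current shell is inside the container, run cat /proc/1/cgroup | grep docker (any output means you are in the container).

- Install the software required to deploy Qwen-VL-Chat.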

yum install -y git git-lfs wget tmux gperftools-libs anolis-epao-release

- Enable Git LFS. Downloading the pre-trained model requires Git LFS.

git lfs install
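The container check described in the Important note of this step can be wrapped in a small function. In addition to the grep on /proc/1/cgroup, this sketch looks for /.dockerenv, a file Docker creates at the root of its containers, because on hosts that use cgroup v2 the /proc/1/cgroup file may not mention docker at all. The function name and its optional path-prefix argument (used only for testing) are hypothetical.

```shell
# Hypothetical helper: succeeds if the current shell appears to be inside a Docker container.
# Usage: in_container [prefix]   (prefix is empty for the real root; set it only for testing)
in_container() {
  local root="${1:-}"
  # Docker creates an empty /.dockerenv file in the root of each container.
  [ -f "$root/.dockerenv" ] && return 0
  # Fallback: the article's check; reliable with cgroup v1, not always with v2.
  grep -qs docker "$root/proc/1/cgroup"
}
```

For example, `in_container && echo container || echo host`; if it prints host, re-enter the container with the docker exec command above before continuing.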

Step-2: Download the source code and model

- Create a tmux session.

tmux

Note: Downloading the pre-trained model takes a long time, and whether it succeeds depends heavily on network conditions. Download it inside a tmux session so that a dropped connection to the ECS instance does not interrupt the download.

- Download the Qwen-VL-Chat project source code and the pre-trained model.

git clone https://github.com/QwenLM/Qwen-VL.git

git clone https://www.modelscope.cn/qwen/Qwen-VL-Chat.git qwen-vl-chat

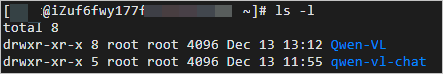

- Check the current directory.

ls -l

After the download completes, the current directory should contain the Qwen-VL source directory and the qwen-vl-chat model directory.
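If Git LFS was not active when the model repository was cloned, the large weight files are left as small text pointers instead of real weights, and loading the model later fails. Git LFS pointer files begin with the line `version https://git-lfs.github.com/spec/v1`, so a rough check is to list small files in the model directory that start with it. The function name below is hypothetical, and the size filter is a heuristic based on pointers being tiny text files.

```shell
# Hypothetical helper: list files under a model directory that are still Git LFS pointer stubs.
# Usage: find_lfs_pointers [dir]   (dir defaults to ~/qwen-vl-chat)
find_lfs_pointers() {
  local dir="${1:-$HOME/qwen-vl-chat}"
  # Pointer files are tiny text files (well under 1 KiB), so only small files
  # are searched, and the .git directory is skipped.
  find "$dir" -path "$dir/.git" -prune -o -type f -size -1024c \
    -exec grep -l '^version https://git-lfs.github.com/spec/v1' {} +
}
```

No output means the weights were downloaded. If it prints any paths, run `git lfs pull` inside ~/qwen-vl-chat to fetch the real files.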

Step-3: Deploy the running environment

- Change the pip download source. Before installing the dependencies, we recommend switching pip to a mirror to speed up installation.

- Create a pip folder.

mkdir -p ~/.config/pip

- Configure pip to use the mirror.

cat > ~/.config/pip/pip.conf <<EOF
[global]
index-url=http://mirrors.cloud.aliyuncs.com/pypi/simple/

[install]
trusted-host=mirrors.cloud.aliyuncs.com
EOF
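The mirror host above is, as we understand it, Alibaba Cloud's internal-network endpoint for ECS instances. Outside Alibaba Cloud, the public mirror mirrors.aliyun.com is the usual alternative. The sketch below writes the same two settings for whichever host you choose. The function name and the optional directory argument are hypothetical.

```shell
# Hypothetical helper: write a user-level pip.conf that points at an Alibaba Cloud PyPI mirror.
# Usage: write_pip_conf [host] [dir]
write_pip_conf() {
  local host="${1:-mirrors.cloud.aliyuncs.com}"
  local dir="${2:-$HOME/.config/pip}"
  mkdir -p "$dir"
  # Same keys as the pip.conf above; trusted-host lets pip accept plain HTTP.
  cat > "$dir/pip.conf" <<EOF
[global]
index-url=http://$host/pypi/simple/

[install]
trusted-host=$host
EOF
}
```

For example, `write_pip_conf mirrors.aliyun.com` targets the public mirror, and running it with no arguments reproduces the configuration above.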


Install the Python dependencies required at runtime, then configure the OpenMP environment variables.

yum install -y python3-transformers python-einops

pip install tiktoken transformers_stream_generator accelerate gradio

cat > /etc/profile.d/env.sh <<EOF
export OMP_NUM_THREADS=\$(nproc --all)
export GOMP_CPU_AFFINITY=0-\$(( \$(nproc --all) - 1 ))
EOF
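These two lines give OpenMP one thread per vCPU. OMP_NUM_THREADS is set to the CPU count reported by nproc --all, and GOMP_CPU_AFFINITY, which GNU OpenMP (libgomp) reads, binds the threads to CPUs 0 through N-1. The backslashes keep the $(...) expressions from being expanded while the file is written, so they are evaluated each time the profile is sourced. The hypothetical helper below prints the values the file produces for a given CPU count.

```shell
# Hypothetical helper: print the OpenMP settings that env.sh derives from the CPU count.
# Usage: omp_settings [ncpus]   (defaults to `nproc --all`)
omp_settings() {
  local n="${1:-$(nproc --all)}"
  echo "OMP_NUM_THREADS=$n"
  # CPUs are numbered from 0, so the last one is n-1.
  echo "GOMP_CPU_AFFINITY=0-$((n - 1))"
}
```

On an ecs.g8a.4xlarge instance with 16 vCPUs, `omp_settings 16` prints OMP_NUM_THREADS=16 and GOMP_CPU_AFFINITY=0-15. After sourcing /etc/profile, `echo "$OMP_NUM_THREADS $GOMP_CPU_AFFINITY"` should show the same values.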

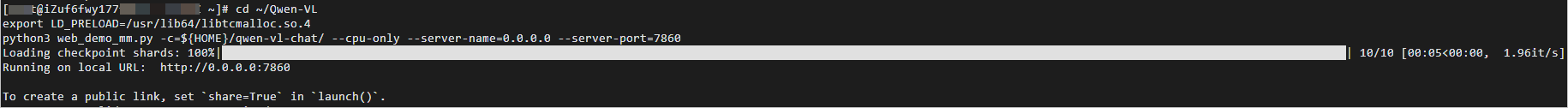

source /etc/profile

Step-4: Start an AI conversation
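The launch command preloads tcmalloc from /usr/lib64/libtcmalloc.so.4, which the gperftools-libs package installed in Step-1 is expected to provide. If that file is missing, the dynamic loader only prints a warning and the service runs without tcmalloc. The following sketch checks for the library before you export LD_PRELOAD. The function name is hypothetical. The fallback libtcmalloc_minimal.so.4 is the profiler-free variant that gperftools-libs also ships on RHEL-compatible systems; its presence on Anolis OS is an assumption.

```shell
# Hypothetical helper: print the first tcmalloc library found, or fail if there is none.
# Usage: find_tcmalloc [prefix]   (prefix is empty for the real filesystem; set it only for testing)
find_tcmalloc() {
  local root="${1:-}"
  local candidate
  # The first path is the one used in the launch command; the second is an
  # assumed fallback from the same package.
  for candidate in /usr/lib64/libtcmalloc.so.4 /usr/lib64/libtcmalloc_minimal.so.4; do
    if [ -e "$root$candidate" ]; then
      printf '%s\n' "$root$candidate"
      return 0
    fi
  done
  return 1
}
```

For example: `if lib=$(find_tcmalloc); then export LD_PRELOAD="$lib"; else echo "tcmalloc not found; check gperftools-libs" >&2; fi`.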

- Run the following commands to start the WebUI service.

cd ~/Qwen-VL

export LD_PRELOAD=/usr/lib64/libtcmalloc.so.4

python3 web_demo_mm.py -c=${HOME}/qwen-vl-chat/ --cpu-only --server-name=0.0.0.0 --server-port=7860

When the following information appears, the WebUI service has started successfully.

- Enter http://<ECS public IP address>:7860 in the browser address bar to open the web page.

- Click Upload to upload an image, enter your message in the Input box, and click Submit to start image Q&A, detection-box annotation, and other tasks.
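Loading the model on CPU can take a while, so the page may not respond immediately after the launch command. The following is a minimal polling sketch, assuming curl is installed on the machine you run it from. The function name and the 600-second default timeout are hypothetical choices.

```shell
# Hypothetical helper: poll a URL until it answers with a non-error status or the timeout passes.
# Usage: wait_for_webui <url> [timeout_seconds]
wait_for_webui() {
  local url="$1"
  local timeout="${2:-600}"
  local waited=0
  # -f: treat HTTP errors as failures; -s: no progress output.
  until curl -fs -o /dev/null --max-time 5 "$url"; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "timed out waiting for $url" >&2
      return 1
    fi
    sleep 5
    # Counts only sleep time, so the real wait can be somewhat longer.
    waited=$((waited + 5))
  done
  echo "WebUI is up at $url"
}
```

Run `wait_for_webui "http://<ECS public IP address>:7860"` from your local machine, or `wait_for_webui http://127.0.0.1:7860` in a second session on the instance.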

The following example is far from the limit of what Qwen-VL-Chat can do. Try different input images and prompts to explore its capabilities further.
